To prove that you’re human online, you’re often forced to play quirky games: spotting cats or identifying street signs among a tableau of random images. Captcha, the often irritating internet puzzle, has long been the most common way websites screen out digital impostors. But with bots invading our presidential campaigns and elections, how long will it be before human and machine are indistinguishable?

The battle began in 1997, when early internet spammers set out to game the ranking algorithm of the AltaVista search engine. Bots swamped its servers with unwanted URL submissions, polluting the search results. Andrei Broder, then the company’s chief scientist (he later became an IBM Distinguished Engineer and a Distinguished Scientist at Google), responded by prototyping a new security check based on distorted text images that humans could read but machines could not. A Carnegie Mellon team that included Luis von Ahn later developed the idea further and dubbed it CAPTCHA (a backronym for “Completely Automated Public Turing test to tell Computers and Humans Apart”). And yes, Google later bought Dr. von Ahn’s startup, reCAPTCHA, and put Captcha to work.

From Captcha to reCaptcha

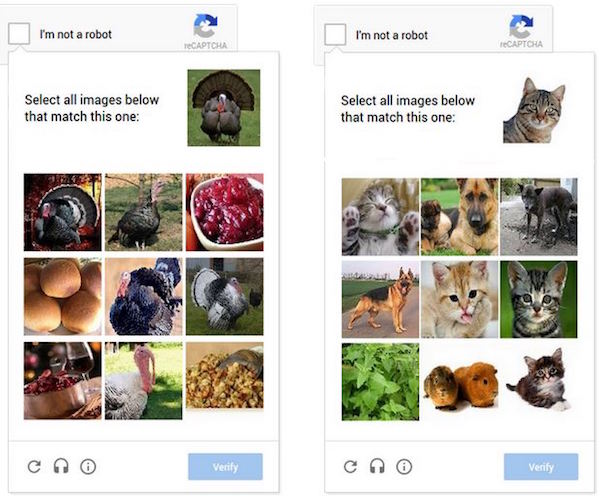

Captcha sought to keep out bots by installing a gate. Computers have long struggled to read text embedded in an image, a relatively simple task for humans but beyond the machines of the day. The approach worked well for years, making Captcha checkpoints the front line of the human vs. machine battle: they safeguarded websites, databases, and users by blocking unwanted requests and spam from automated bots. Eventually, though, image analysis programs leveraging machine learning caught up. That’s why most Captcha tests you see today are more complex, riddled with stray lines and distorted lettering. Captcha generators proliferated on the web, giving independent developers a free security tool and paving the way for a massive, crowdsourced effort to decipher words in old newspapers, magazines, and books. Google later used a similar tactic to mobilize millions of users to work (for free) on improving its image recognition software by identifying street signs and other elements critical to Google Maps.

But computers keep getting faster and smarter, and the old Captcha approaches are no longer state-of-the-art in this ongoing war. Under Google, the technology has grown up. With reCaptcha, Google’s free developer service, often all the user does is tick a small “I’m not a robot” box. Oversimplified? Not at all. Instead of relying primarily on image recognition, reCaptcha reportedly weighs signals such as your computer’s location, your browsing history, how you move before you click, and other “human-like” behaviors to gauge whether you’re real; if it remains unsure, it falls back to an image puzzle. Experts believe that Google, with its years of experience in machine learning and AI research, is also drawing on undisclosed signals and large-scale computing to improve reCaptcha, and so far it seems to be keeping most bots out of websites.

Why Bots Matter

With all this talk about bots, what are they, and why do they matter? Short for robots, bots are automated computer scripts that perform tasks a human would otherwise do. Unlike humans, they work tirelessly around the clock, and once programmed, they run with little supervision. Humans can also control bots en masse. That makes them both convenient and potentially dangerous. Bots send out purchase confirmations, ping you when your favorite artist releases a new album, and offer tech support or account details. Chatbots are bots infused with a modicum of AI so they can hold limited online conversations with humans. But it’s not always easy to distinguish a real person from a chatbot, and deception is common. Millions of people have been fooled into mistaking bots for people, especially when the bot sports a thumbnail photo and a name. Among the best-publicized chumps were thousands of cheating men on the infidelity dating site Ashley Madison.

The thing is, bots dupe humans. Imagine this scenario: throughout your day, 50 different people on your social networks tell you about their cool new shoes and where they bought them. Before long, you’re Googling the shoes and buying your own pair. But it turns out this was a marketing campaign by the manufacturer, and some of those “people” were actually bots. That is a relatively benign, albeit sneaky, example. By some estimates, bots now make up more than 50 percent of web traffic, giving anonymous entities a powerful weapon for influencing social media and the masses. The Russian government, for instance, allegedly hired a room full of “professional trolls” to flood the Twittersphere with anti-Clinton tweets, mobilizing support for the pro-Trump brigade.

Bots, Politics, and Fake News

Alongside the trolls, “American-looking” Twitter bot accounts were generated at random and tasked with commenting on or retweeting fake news. On close inspection, these tweets looked flawed, coming from accounts with generic profiles and sloppy English. But it’s a numbers game. If 10,000 new profiles are generated every day and even half of them appear remotely human, a chain reaction follows. The bots retweet one another’s posts, and the resulting “proof of popularity” (hundreds or thousands of retweets and comments) makes the original fake post appear credible. The result: the human Twitter user doesn’t suspect that a Clinton scandal story was designed in Russia and pumped up by bots.

Social media is all about the energy and tension between popularity and supposed “authenticity.” Bots provide ammunition for social media because they can create a mushrooming crowd mentality that outpaces our human ability to gauge what’s real and what’s not. Misleading posts or links redirect traffic and goose the number of clicks or site visits.

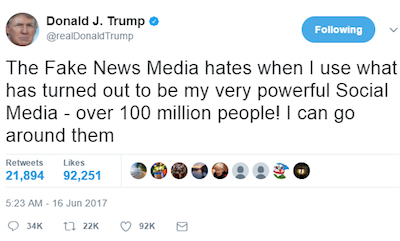

Marketing firms love bots and see them as fantastic digital slaves for flogging products and making money. Bots can also work much like celebrity gossip rags, spinning rumors to drive interest, and celebrities and public personalities have been early adopters. President Donald Trump, both a brand marketer and a celebrity, fits both profiles perfectly, so it’s no surprise that his Twitter account has been a magnet for bots. Newsweek recently reported that nearly half of Trump’s Twitter followers are not human, and that his increasingly rabid bot following has exploded in the past few months.

On paper, each @realDonaldTrump tweet reaches 32 million followers. That’s a lot of support, and a lot of potential customers for companies pushing products and for a President marketing the Office of the President. During the campaign, for instance, Twitter bots raced to reply to Donald Trump’s tweets, because simply landing the first reply could attract thousands of followers to a new account. Profit met politics in a carefully designed campaign to artificially pump up Trump.

After a lot of hand-wringing, humans are seeking to regain some semblance of control. Since the election, Twitter has changed how replies are displayed, removing the incentive for bots to post the first comment, and is working to ban such accounts. But despite fresh efforts by Twitter and Facebook to cut down on fake tweets and posts, it’s a catch-me-if-you-can game, and two problems stand in the way. First, the effort may conflict with corporate business goals. Ease of use has been critical to the wild popularity of both platforms, and automation is key to that success. Put too many Captchas or other hurdles in front of users and some of them will leave, dragging down the overall value of the business. Second, there’s a basic fact of machine life: this is all a bit like a supersonic game of Whack-A-Mole. Bots are being churned out faster than they can be reviewed or banned.

Right now the machines seem to be winning.

For more on how tech and politics are colliding, check out these Smartup.life stories: