What does Machine Learning look like? What’s Artificial General Intelligence all about? Theory met practice when Unity Technologies’ Danny Lange took the stage recently at the BootstrapLabs Applied Artificial Intelligence conference to demonstrate, step by step, how the latest ML- and AI-empowered systems learn to teach themselves.

Up on the big screen Lange played a simple video game in which an animated chicken tries to cross a traffic-clogged road while picking up gift packages. Predictably, at first, the chicken gets creamed, but within ten seconds the bird learns two things: picking up packages is good, and getting hit by a car is bad. After half an hour of training, it’s already outmaneuvering the cars pretty well and scoring lots of packages. “After six hours of training from scratch, no cheating,” Lange says, the chicken behind him magically zipping between vehicles. “It gets really good at it.” The chicken can go on forever.

Danny Lange is a major figure in the booming world of Machine Learning and Artificial Intelligence. He leads a 100-person AI team at Unity, the dominant real-time 3D gaming company, with 50 to 60% of the gaming market share, an installed base of over 4 billion devices, 1.8 billion monthly active users and about 2,100 employees headquartered in San Francisco. Before this, he was head of ML at Uber and Amazon, following a stint at Microsoft and “building autonomous agents at IBM research.” For Lange, this storied career has been “not as much about the destination of AI” but an epic long-haul journey of learning.

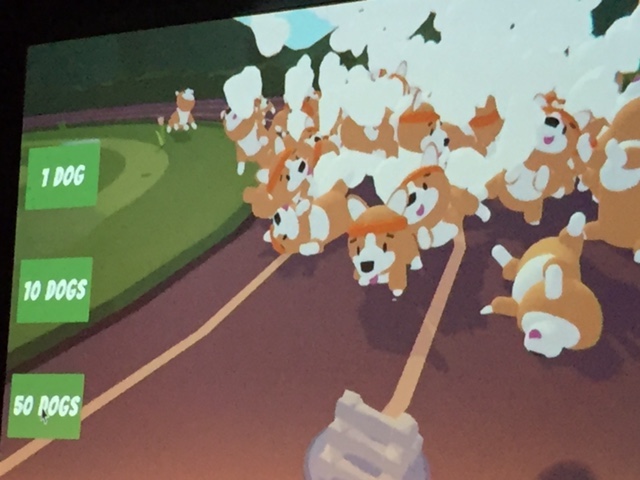

Lange’s next video displayed a rudimentary quadruped, a gangly, eight-jointed figure stumbling and struggling to follow a straight line as it learned to walk. Lange’s ML algorithm had assigned it a basic reward function: move from left to right. The quadruped wiggles around for a long time. But eventually, bit by bit, through trial and error, it progresses from knowing absolutely nothing to an advanced stage where it combines its many joints in an efficient pattern, gradually learning to run. What next? Lange’s video showed a cute puppy learning to fetch a stick. One after another, Lange illustrated how these experiments start from tabula rasa and arrive at mastery.
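For readers who want to see the idea in miniature, here is a rough sketch of reward-driven trial and error. It is not Lange’s quadruped or Unity’s code: it swaps in tabular Q-learning on a toy one-dimensional track, and the function name `train_walker`, the track length, and the learning settings are all assumptions made for illustration. The only signal the agent gets is the one described above: moving right is rewarded.

```python
import random


def train_walker(n_positions=6, episodes=300, seed=0):
    """Tabular Q-learning on a 1-D track (a toy stand-in, not Unity's setup).

    The agent starts at position 0 and the episode ends at the last position.
    Reward is +1 for a step right, -1 for a step left, 0 if it bumps the wall.
    """
    rng = random.Random(seed)
    moves = (-1, +1)                               # action 0 = left, 1 = right
    q = [[0.0, 0.0] for _ in range(n_positions)]   # q[position][action]
    alpha, gamma, epsilon = 0.5, 0.9, 0.2          # assumed hyperparameters

    for _ in range(episodes):
        pos = 0
        for _ in range(50):                        # cap on steps per episode
            if rng.random() < epsilon:
                action = rng.randrange(2)          # explore: random action
            else:
                best = max(q[pos])                 # exploit, breaking ties randomly
                action = rng.choice([a for a in range(2) if q[pos][a] == best])

            new_pos = min(max(pos + moves[action], 0), n_positions - 1)
            reward = float(new_pos - pos)
            # Standard Q-learning update toward reward + discounted best next value.
            target = reward + gamma * max(q[new_pos])
            q[pos][action] += alpha * (target - q[pos][action])

            pos = new_pos
            if pos == n_positions - 1:
                break
    return q


if __name__ == "__main__":
    table = train_walker()
    for position, (left, right) in enumerate(table[:-1]):
        print(position, "prefers", "right" if right > left else "left")
```

Like the quadruped, the agent starts out knowing nothing: every entry in the table is zero, and its first moves are effectively random. The preference for moving right emerges only from repeated attempts and the reward that follows them.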

Yes, these were games, and that dog sure was cute, but ever since IBM programmed Deep Blue to beat chess master Garry Kasparov, it hasn’t really been about chess. And Lange’s focus at Unity isn’t truly all about games. It’s more about learning: “Using game engines as a resource for researchers and developers in academia and industry to create aspects of intelligence.” Unity has a toolkit that can be used to teach Artificial General Intelligence (AGI), with broad applications for learning about AI and ML: graphics, physics, cognitive and visual challenges.

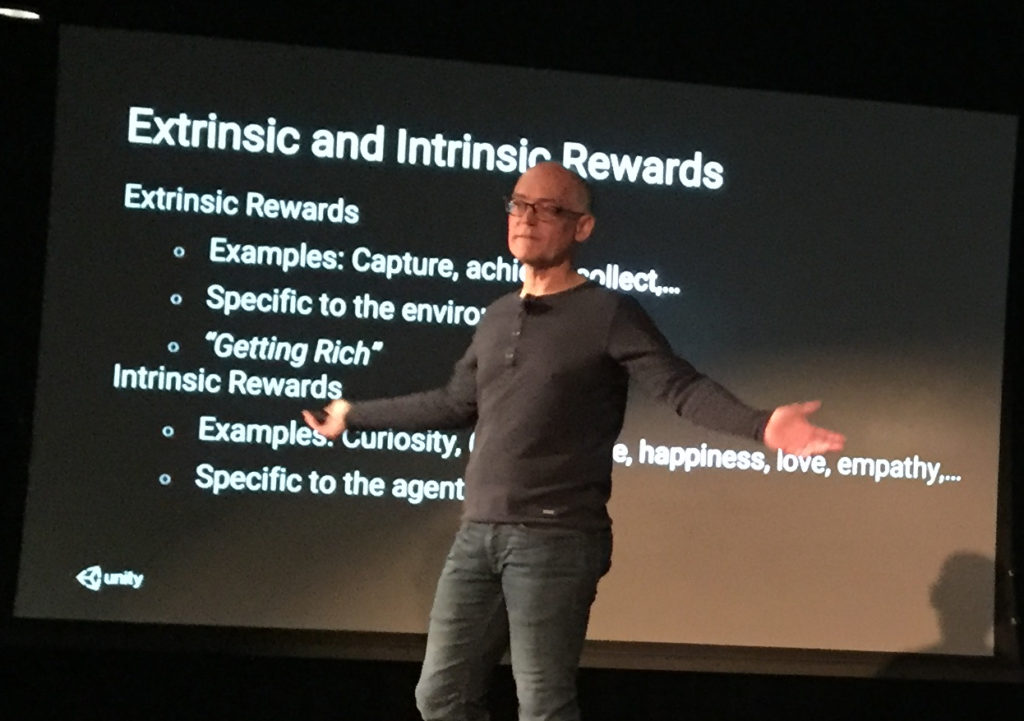

Extrinsic and Intrinsic Rewards

Lange introduced a new concept to the crowd, showing a game in which an agent enters a house full of rooms and moves through the environment in search of a push button hidden in a random room. When the agent pushes the button, a pyramid appears. Its task: to knock over the pyramid and grab the gold bar on top of it. “With simple reward functions,” Lange explained, “you get this emergent behavior.” The game was programmed with a second reward structure that emulates nature by combining extrinsic rewards, like making money and getting rich, with intrinsic ones. “They have nothing to do with the environment. It’s things like curiosity, impatience, happiness, love, et cetera. All these traits increase our survivability so they have a purpose.” When you combine the two, he excitedly explained, you can solve some very hard reinforcement learning problems with complex chains of actions. Lange spelled it out for the crowd: “It’s a loop. The loop is very important to me.”
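One way to picture the combination of the two reward types is the sketch below. The talk did not say how curiosity was computed, so this example substitutes a simple count-based novelty bonus: states the agent has rarely visited pay a little extra. The class name `CuriousReward`, the `intrinsic_weight` parameter, and the room labels are hypothetical, chosen only for illustration.

```python
import math
from collections import Counter


class CuriousReward:
    """Adds a count-based novelty bonus (an assumed stand-in for curiosity)
    to whatever extrinsic reward the environment hands out."""

    def __init__(self, intrinsic_weight=0.1):
        self.intrinsic_weight = intrinsic_weight   # how much curiosity counts
        self.visits = Counter()                    # visits seen per state

    def __call__(self, state, extrinsic_reward):
        self.visits[state] += 1
        # The bonus shrinks as a state becomes familiar: 1, 1/sqrt(2), 1/sqrt(3), ...
        novelty = 1.0 / math.sqrt(self.visits[state])
        return extrinsic_reward + self.intrinsic_weight * novelty


if __name__ == "__main__":
    reward = CuriousReward(intrinsic_weight=0.5)
    print(reward("hallway", 0.0))       # first visit: full novelty bonus
    print(reward("hallway", 0.0))       # second visit: smaller bonus
    print(reward("button_room", 1.0))   # extrinsic reward plus novelty bonus
```

Even when the environment pays nothing, as in the rooms that hold no button, the bonus gives the agent a reason to keep opening new doors. That is roughly the role curiosity plays in the house-of-rooms game.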

Not all loops are created equal. There’s a fine line between exploration and exploitation, depending on who’s building the algorithm, and for what purpose. Amazon, Google, and just about every other AI-based e-commerce provider have learned to glean your preferences by tracking your clicks, to serve up insidious targeted ads. Alexa famously picked up racist and sexist response patterns not only from the unconscious bias of its programmers, but from drawing on stores of data that perpetuate stereotypes. Social media platforms such as Twitter and Facebook maximize addictive behavior by borrowing from gamification, continually tweaking delivery rates and patterns to keep you hooked in a vicious cycle.

Learning to Work Together

But there’s hope. In his culminating example Lange showed how you can also teach collaboration and teamwork. Up on the screen a simple ball-kicking agent is trying to score a goal on a soccer field. A second agent is added, and the two learn to play together. Trained together, one of them soon starts behaving like a goalie, independently determining that “the best way to protect the goal from scoring is to stand in front of it. And the best way to score is to run sideways up along the line.” The machine has figured out very quickly in this collaborative mode a concept that took humans years to perfect on the soccer pitch – the superior geometry of diagonal passes and widening the field.

Ever the evangelist, Lange points out that AI can be applied to emergent traits such as “language, collaboration and anticipation.” When intelligent agents start cooperating with each other, they begin to develop methods they learn themselves. “Think about how powerful these agents can be,” he says. “Humans went from picking berries in the forest to putting a person on the moon. Just by starting to collaborate.” And sharing was the order of the day at AAI19. The annual event brings together an enthusiastic community of several hundred professionals with open source values who support the development of AI applications across a breadth of sectors, including enterprise, transportation, logistics, health, energy, FinTech, human capital, and cybersecurity. Next week, we’ll cover AI and the future of food, in our third installment of Applied AI. Read our first story here.